Last month, as part of my work, I attended TRB’s 2nd Annual Workshop on Vehicle Automation, held at Stanford University. It had a lot of interesting presentations and discussions, and almost all of the material is available at the above website. The workshop also had several demonstration vehicles, including one of Google’s cars, which my colleague got to ride in, and a very similar vehicle from Bosch, which I got to ride in.

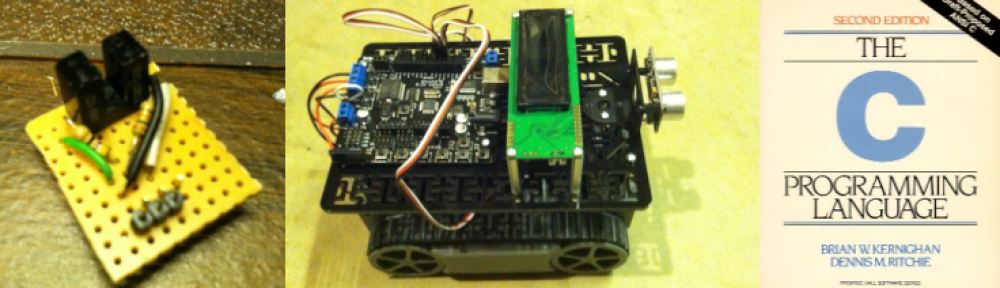

The Bosch vehicle is very similar to everything I’ve seen and heard about the better-known Google vehicles. It has a number of forward-, rear-, and side-looking radars, as well as the LIDAR on the roof. The LIDAR and the very accurate GPS are very expensive sensors, and their cost is not expected to drop to what’s needed for production vehicles. Bosch’s research plan is to transition to a more cost-effective sensor suite over the next several years. It was fascinating to watch the real-time display of what the LIDAR and radars were seeing as we drove. One thing I found interesting is that the vehicle was often able to “see” several cars ahead. Here’s a close-up of the LIDAR system:

For the demo, the human driver drove the vehicle out onto the freeway and then engaged the automation features. The vehicle then steered itself, staying within the lane, and held its speed. When a slower vehicle pulled in front, the vehicle automatically checked the lane to the left and then moved into it in order to maintain the desired set speed. VERY impressive!

A couple of notes: at one point the vehicle oscillated very slightly while staying well within the lane, much as a new driver might sometimes do. I thought it might be the tuning of the control algorithm and asked about it, but the researcher believed it was actually a slight wobble in the prescribed path on the electronic map, although he was going to have to look at the details after the conference to confirm this. Also, when a car pulled in front of us with a rather short separation distance, the vehicle braked harder than it probably needed to, which IS just a matter of getting the tuning right. Other than the hard braking, the ride felt very comfortable and normal.

This was actually my third demo ride in an automated vehicle. The first was at Demo ’97, as part of the Automated Highway System program. That was very impressive for its time, but the demo took place on a closed-off roadway, rather than in full normal traffic on an open public freeway, like the Bosch demo. In addition, the vehicle control systems and sensors were far less robust, relying on permanent magnets in the roadway for navigation. Even then, there was work going on with vision systems, but the computing power wasn’t quite there yet. In 2000, I rode in a university research vehicle that used vision systems around a test track at the Intelligent Transport Systems World Congress in Turin, Italy. That system used vision rather than magnets and was again a great step forward, but it was still far from robust. Today’s systems, if they can get the cost down, seem well on the path to commercial sale.

While Google executives have talked about vehicles with limited self-driving being sold before 2020, most other companies were talking about the mid-2020s. This isn’t for a vehicle that can totally drive itself anywhere, which is the long-term dream, but rather for a vehicle that can often drive itself and can totally take over for long stretches of the roadway. The National Highway Traffic Safety Administration (NHTSA) has a very useful taxonomy that defines vehicle automation as having five levels:

- No-Automation (Level 0): The driver is in complete and sole control of the primary vehicle controls – brake, steering, throttle, and motive power – at all times.

- Function-specific Automation (Level 1): Automation at this level involves one or more specific control functions. Examples include electronic stability control or pre-charged brakes, where the vehicle automatically assists with braking to enable the driver to regain control of the vehicle or stop faster than possible by acting alone.

- Combined Function Automation (Level 2): This level involves automation of at least two primary control functions designed to work in unison to relieve the driver of control of those functions. An example of combined functions enabling a Level 2 system is adaptive cruise control in combination with lane centering.

- Limited Self-Driving Automation (Level 3): Vehicles at this level of automation enable the driver to cede full control of all safety-critical functions under certain traffic or environmental conditions and in those conditions to rely heavily on the vehicle to monitor for changes in those conditions requiring transition back to driver control. The driver is expected to be available for occasional control, but with sufficiently comfortable transition time. The Google car is an example of limited self-driving automation.

- Full Self-Driving Automation (Level 4): The vehicle is designed to perform all safety-critical driving functions and monitor roadway conditions for an entire trip. Such a design anticipates that the driver will provide destination or navigation input, but is not expected to be available for control at any time during the trip. This includes both occupied and unoccupied vehicles.
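For readers who think in code, the five levels above map naturally onto an ordered enumeration. The following is only a minimal sketch of that idea in Python: the names `AutomationLevel`, `can_cede_all_safety_functions`, and `driver_must_be_available` are my own illustrative choices, not anything NHTSA publishes, and the two helper rules are a simplified reading of the level descriptions above.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """Hypothetical encoding of the five NHTSA levels listed above."""
    NO_AUTOMATION = 0
    FUNCTION_SPECIFIC = 1
    COMBINED_FUNCTION = 2
    LIMITED_SELF_DRIVING = 3
    FULL_SELF_DRIVING = 4


def can_cede_all_safety_functions(level: AutomationLevel) -> bool:
    # Assumption: only Levels 3 and 4 let the driver hand over ALL
    # safety-critical functions (Level 3 only under certain conditions).
    return level >= AutomationLevel.LIMITED_SELF_DRIVING


def driver_must_be_available(level: AutomationLevel) -> bool:
    # Assumption: at every level below 4 the driver is either in control
    # or expected to be available to take control back.
    return level < AutomationLevel.FULL_SELF_DRIVING


if __name__ == "__main__":
    for level in AutomationLevel:
        print(
            level.value,
            level.name,
            can_cede_all_safety_functions(level),
            driver_must_be_available(level),
        )
```

Because the levels are ordered, comparisons such as `level >= AutomationLevel.LIMITED_SELF_DRIVING` read much like the prose definitions: Level 3 is the only level where the driver can cede full control yet still has to be available.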

Level 2 systems have already been announced as coming into production by several automakers within the next 5 years. Level 3 by the mid-2020s is the stated goal of several companies. Full automation (the truly autonomous vehicle with no driver required) is still the stuff of science fiction, but it is where a lot of the really interesting effects on society would develop.

Here’s a short 3-minute Bosch video on their vehicle and their research: